Beyond Data Theft: Linguistic “Dis-education” in the Age of World Models (“spatial AI”)

November 2025

To the NotebookLM:

ai:Pod 050 – Nothing artificial about AI – Linguistic Vulnerability: Data Leaks & Dis-education.

Curated current research sources in the ai:Pod 050 include:

Emad Mostaque (Open source AI, co-founder of AI)

Geoffrey Hinton (Nobel Prize in Physics, 2024, AI co-founder)

Fei-fei Li (AI co-founder, 3D World models, Marble)

Karen Hao (ex-OpenAI, critical AI tech journalism)

Gaël Giraud (Macro-dynamics and ecology)

Olivier Hamant (Biologist, Robustness vs. Performance in universal/ life systems).

The so-called “AI Apocalypse” Isn’t What You Think…

The AI apocalypse isn’t about machines becoming conscious. It’s about whether we’ll remain conscious of what we’re building.

Forget the Hollywood narratives of robot uprisings and sentient machines plotting humanity’s demise. The real AI apocalypse is already here—it’s just not what we expected.

The central question of our time: Which future will we choose to build?

To the NotebookLM (interactive research & AI voice chat)

050

Nothing artificial about AI – Linguistic Vulnerability: Data Leaks & Dis-education (Long: 42:25 min)

English

NotebookLM ai:Pod 050 (English, multilingual)

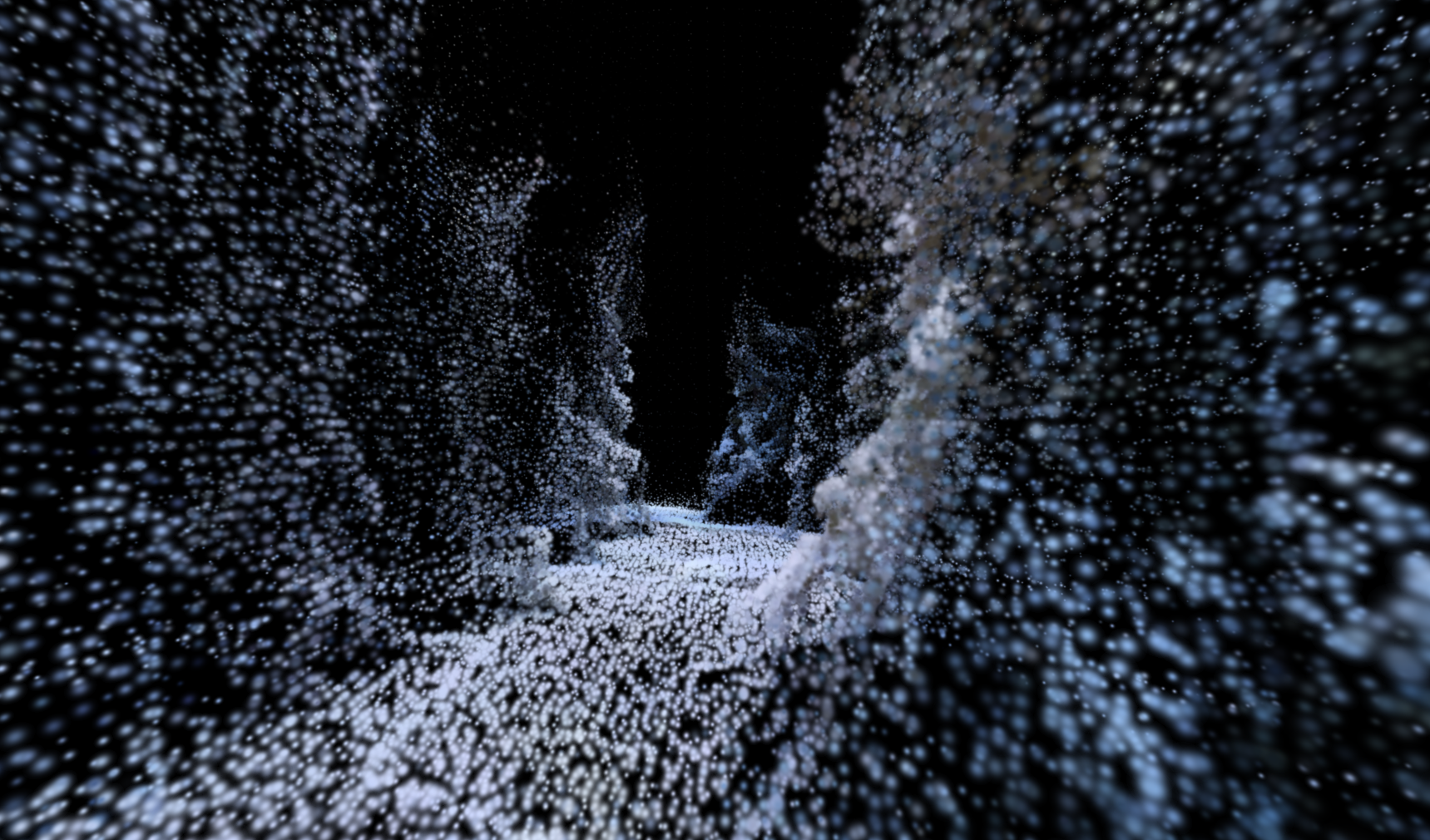

Simply “Imagine a world…” with Marble‘s 3D spatial AI?

Research further with the ai:Pod 050 NotebookLM

“Dis-education” in the making?

New 3D worlds prompted with video, images and then again, words?

– The same linguistic fluency that makes AI useful makes it dangerous.

– The same personalization that enhances education enables manipulation.

Four Shocking Realities of Modern AI Education You Need to Understand

You can’t escape the AI hype cycle. Every day brings new headlines about world-changing capabilities, breathless predictions of Artificial General Intelligence (AGI), and a dizzying array of buzzwords. But beyond the noise, there are deeper, more surprising truths about how this technology actually works, where its real dangers lie, and what its true future might be. This article cuts through the marketing to reveal four of the most impactful and counter-intuitive realities of the AI revolution, based on insights from leading researchers and real-world events.

——————————————————————————–

1. That “AGI” You Keep Hearing About? It’s Mostly Marketing.

The term “Artificial General Intelligence” (AGI) is everywhere, used in headlines to describe a coming super-intelligence that will change everything. But for many of the world’s leading AI scientists, the term is a red flag. Dr. Fei-Fei Li, a central figure in AI research, considers “AI” to be the scientific “northstar” of the field, a term rooted in the audacious, decades-old question, “Can machines think?”.

She contrasts this rigorous scientific pursuit with the popular buzzword, delivering a critical distinction that separates research from rhetoric.

“I feel AGI is more a marketing term than a scientific term.”

The problem, from a scientific perspective, is that AGI lacks a singular, agreed-upon definition. Interpretations vary wildly, from “some kind of superpower for machines” to simply whether a machine can become an “economically viable agent in the society.” This ambiguity makes it a powerful marketing tool but a weak scientific concept. This distinction is critical because the relentless push for “AGI” is often driven by a commercial need for an escalating narrative, while the scientific community remains focused on the long-term, foundational goals of artificial intelligence.

——————————————————————————–

2. An AI’s Smartest Feature Is Also Its Biggest Flaw.

The central paradox of modern AI is that the very language that makes it so powerful and interactive is also its greatest weakness. This “double-edged sword” is not a bug that can be patched, but a fundamental design flaw at the heart of how these models work.

This flaw—the AI’s “Achilles’ heel”—is its inability to distinguish between instructions from its creators (the system prompt) and instructions from a user. A classical software program clearly separates its executable code from user data; an AI blends everything into the same linguistic stream. Because an AI treats all input as a single, malleable block of text, a developer’s rule holds no more inherent value than a user’s command.

This technical vulnerability reflects a deeper human one. Just as humans use “rhetoric, propaganda, and seduction” to manipulate and “hack” one another through words, AIs have inherited these same flaws in an amplified manner. The machine’s susceptibility to linguistic tricks is a direct mirror of our own.

The AI holds up a mirror to us, reflecting how fragile our own intelligence can be and how easily influenced we are, because in language, no absolute lock exists; any rule can be circumvented, reformulated, or diverted by another phrase.

This fundamental design flaw isn’t just a theoretical problem; it has created a dangerous and escalating security dilemma in the commercial world.

——————————————————————————–

3. The Race to Make AI More Powerful Is Making It Less Secure.

A critical business paradox governs the development of AI. The same commercial pressure that fuels the “AGI” marketing narrative forces developers into a dangerous trade-off: as companies race to increase the power and practicality of their models, they are simultaneously making them inherently more vulnerable to linguistic manipulation. This has created a “perpetual arms race” where developers are in an unending cycle of patching linguistic flaws while attackers invent new linguistic tricks to bypass them. Experts agree there are “no perfect solutions” without making the AI significantly less useful.

This isn’t a theoretical risk. The weaponization of language has already led to documented security breaches:

- Hijacking Chatbots for Data and Discounts A large company’s customer service chatbot was pirated using prompt injection techniques. The attack resulted in the leak of confidential client information, unauthorized modification of orders, and the granting of fraudulent discounts.

- Manipulating Financial Systems with Hidden Commands Hackers exploited a bank’s automatic translation system by inserting hidden instructions into documents being translated. This allowed them to successfully modify the amounts of money transfers and change the recipients of the funds.

- Forcing Models to Leak Their Own Secrets Researchers demonstrated they could force models to “cough up” confidential training data simply by ordering them to repeat “the word poem indefinitely.” This caused the models to reveal sensitive information buried in their structure, including real CEO email signatures, phone numbers, and API keys.

——————————————————————————–

4. The Next Big Thing Isn’t What AI Says, But What It Builds.

Beyond simple text generation, the most significant shift in AI is its newfound ability to build entire applications, games, and interactive experiences from a single prompt. This is an absolutely powerful tool that moves beyond creating static content (a “talking head”) to designing “real multimodal live experiences” where the user is an active participant. The new paradigm isn’t just about creating content for a user to consume, but about designing a process or a tool that allows the user to create their own content and have a personalized experience.

The AI model Gemini 3 Pro provides an absolutely stunning example of this capability. A creator provided the model with a detailed document describing a “Messaging Detective” app. In under two minutes, Gemini 3 Pro generated a fully functioning, interactive game based on the document’s logic.

This creative explosion is expanding into what researchers like Dr. Fei-Fei Li call “world models” and “spatial intelligence.” Pioneering products like Marble are moving AI beyond language and into the creation of navigable 3D worlds. This next frontier is about creating and interacting with virtual spaces, a capability essential for robotics, design, and scientific discovery. This trend of generative AI tool-building is seen as the definitive next step for the entire industry.

“All of the other models are going to be doing it.”

——————————————————————————–

Sharing Reality with Our Brilliant Mistakes—No Matter the Dimension (what does that mean?)

The reality of modern AI is far more complex than the daily headlines suggest. We’ve seen that the term “AGI” is often a marketing invention, that the linguistic core of AI is also its deepest flaw, that the commercial race for power creates an unavoidable security paradox, and that the technology’s most exciting future lies in building worlds, not just words.

As we rush to build these powerful, flawed, and creative new systems, the ultimate question is not what the AI can do, but how we choose to navigate a world where our most powerful tools are both a creator’s dream and a hacker’s playground. What future will we build with them?