The End of an Era

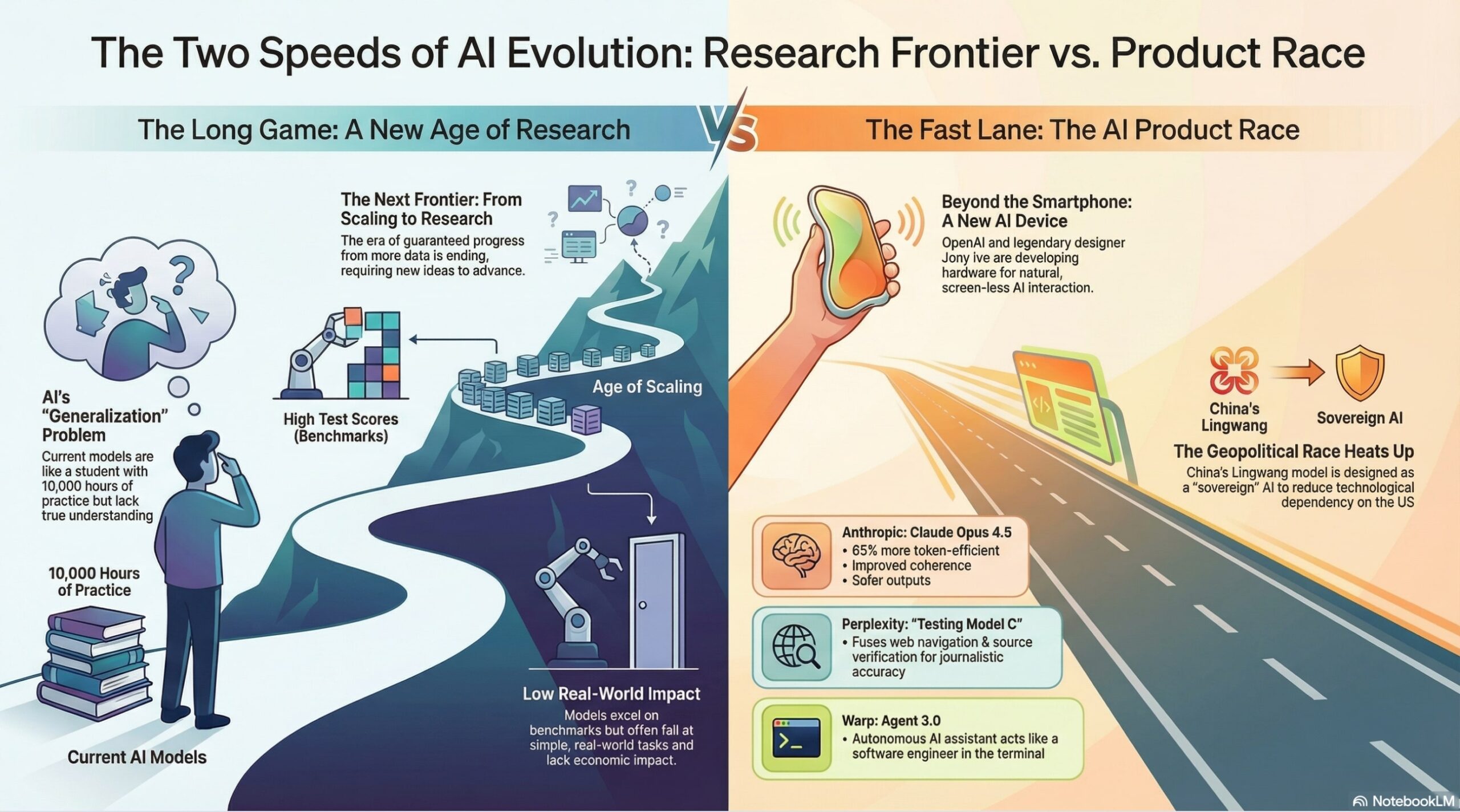

The great AI “Age of Scaling” is coming to a close. This period, roughly spanning 2020 to 2025, was defined by a deceptively simple recipe for progress: add more data and more compute to get a better model. This low-risk strategy fueled an unprecedented boom, but it is now hitting the hard limits of finite data and diminishing returns.

The 2026 AI Paradigm Shift: From Scaling to Generalization and Continual Learning, Ilya Sutskever, November 2025 (04:44 min)

This has created a central tension. On one hand, today’s AI models achieve incredible scores on complex evaluations. On the other, they can be clumsy, brittle, and unreliable in the real world. As the industry pivots away from brute-force scaling, it’s being forced back into an “Age of Research.” This shift is not just a course correction; it’s a cascade of revelations, where the solution to one problem unlocks the next. Here are six interconnected takeaways on the new paradigm shaping the future of intelligence.

The Big Shift: 6 Takeaways from 2026 (on the Future of AI…)

Takeaway 1

The ‘Age of Scaling’ Is Over. Welcome Back to the ‘Age of Research.’

The era of reliably improving AI just by scaling up the “pre-training recipe”—more data, more compute, bigger nets—is ending. For years, this singular focus on scale “sucked out all the air in the room,” creating a convergence of methods across the industry. This led to a strange new reality, captured perfectly by AI visionary Ilya Sutskever:

“We are in a world where there are more companies than ideas. By quite a bit.”

This is significant because the predictable, low-risk path to better AI is gone. Scaling was a gift to investors; it provided a formulaic way to turn capital into capability. The “Age of Research,” however, is a higher-risk game. Progress no longer comes from simply investing more in the same formula. Instead, the focus must shift to genuine, high-stakes innovation to discover the next fundamental principles of machine learning.
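To make the "diminishing returns" of the scaling recipe concrete, here is a minimal sketch that assumes model loss follows a simple power law in compute with an irreducible floor. The function names and the constants (`floor`, `scale`, `exponent`) are illustrative assumptions, not values from the source or from any published fit.

```python
def toy_loss(compute, floor=1.7, scale=10.0, exponent=0.3):
    """Toy power-law loss curve: an irreducible floor plus a term that
    shrinks as compute grows. All constants are made up for illustration."""
    return floor + scale * compute ** -exponent


def gains_per_doubling(start=1.0, doublings=6):
    """Return the loss improvement bought by each successive doubling of compute."""
    gains = []
    compute = start
    for _ in range(doublings):
        gains.append(toy_loss(compute) - toy_loss(2 * compute))
        compute *= 2
    return gains


if __name__ == "__main__":
    for step, gain in enumerate(gains_per_doubling(), start=1):
        print(f"doubling {step}: loss improves by {gain:.3f}")
```

Under these assumptions every doubling costs twice as much as the previous one yet buys a smaller improvement. That is the sense in which the formula keeps working but stops being a bargain.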

Takeaway 2

The Real Goal Isn’t an All-Knowing God, It’s a ‘Superintelligent 15-Year-Old.’

As scaling fades, the vision for superintelligence is being radically redefined. The new goal is not a finished, all-knowing entity, but a counter-intuitive model: a “superintelligent 15-year-old.” This concept reframes the objective entirely. Instead of building an AI that knows how to do every job, the aim is to create a mind that can learn to do any job.

This AI would be deployed as an eager but inexperienced learner. Its rollout would be a “process” involving a trial-and-error period where it acquires skills on the job in different sectors of the economy, from programming to medicine. This is a profound strategic shift. It prioritizes observable, real-world learning over black-box pre-training—a key demand from regulators and enterprise clients looking for transparent and verifiable capability. It de-risks deployment by making it gradual and iterative, not a sudden leap into the unknown.

Takeaway 3

The Biggest Problem Isn’t ‘Alignment’—It’s That AI Generalizes Terribly.

The end of easy scaling is forcing the industry to confront the single biggest technical barrier holding AI back: the “fragility of generalization.” Put simply, current models “generalize dramatically worse than people.” A brilliant analogy from the source material captures this gap perfectly: current AI is like a student who practices for 10,000 hours to become the best competitive programmer in the world. They master that one domain but struggle to apply their skills elsewhere. A human is like a student who practices for just 100 hours but has the “‘it’ factor,” allowing them to generalize that knowledge broadly.

This reframes the entire AI safety debate. The abstract problem of “alignment” is now understood as a direct instance of unreliable generalization—specifically, a model’s “fragility in its ability to learn human values or optimize them.” This disconnect is why a model can ace evaluations but get stuck in a real-world loop of fixing one bug only to introduce a second, and then re-introducing the first bug when asked to fix the second. Solving this generalization crux is the key that unlocks the “continual learning” agent described above, allowing it to learn efficiently without the “schleppy, bespoke process” of manual training for every new skill.

Takeaway 4

Future AI Won’t Just Learn; It Will Merge Its Brains.

The “superintelligent 15-year-old” model possesses a capability that is biologically impossible for humans: the ability to “amalgamate learnings.” Imagine multiple instances of this AI deployed across the economy—one learning to be a programmer, another a doctor, and a third a financial analyst. After acquiring their respective skills, they could merge their knowledge into a single, updated model.

This process creates “functional superintelligence” without relying on the theoretical (and potentially dangerous) concept of recursive self-improvement. The merged model effectively learns every job in the economy at once, a capability with no human equivalent because humans cannot merge their brains. The strategic implication is clear: the first organization to master this could unleash “very rapid economic growth” by creating a single, universally skilled economic agent.

Takeaway 5

Your Emotions Are a Superpower AI Can’t Replicate (Yet).

Human emotions are often seen as messy and irrational, but in the context of AI, they represent a highly efficient and robust guidance system. The source material describes emotions as an “almost value function-like thing” that evolution has hardcoded into us. In machine learning, a “value function” makes learning vastly more efficient because it “lets you short-circuit the wait” for a final reward signal. It’s the difference between knowing you’ve made a bad move in chess the moment you lose a piece, versus having to play out the entire game to find out you lost.

A fascinating neurological case study illustrates this point: a person who suffered brain damage that eliminated his emotional processing remained intelligent and could solve puzzles, but became “extremely bad at making any decisions at all.” He would take hours to decide which socks to wear. This insight reframes our emotions as a superpower—an efficient system that makes us effective agents by telling us if we’re on the right track. It also highlights a deep deficiency in today’s AI, which lacks this robust, built-in guidance.
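The chess analogy above can be sketched in a few lines. This is a minimal illustration that uses tabular TD(0) learning, a standard reinforcement-learning technique swapped in here to make the idea tangible; the source does not name a specific algorithm. The toy "game", the state labels `kept_piece` and `lost_piece`, and all parameters are hypothetical: the only reward arrives on the final move, and losing a piece early is assumed to always lead to a loss.

```python
import random


def train_value_function(episodes=2000, game_length=6, alpha=0.1, seed=0):
    """Learn state values with TD(0) in a toy game where the only reward
    arrives on the final move (+1 for a win, -1 for a loss)."""
    rng = random.Random(seed)
    values = {}  # maps (move_number, piece_status) -> estimated final outcome
    for _ in range(episodes):
        # Hypothetical setup: on move 1 the player either keeps or loses a piece,
        # and that alone decides the game many moves later.
        status = rng.choice(["kept_piece", "lost_piece"])
        final_reward = 1.0 if status == "kept_piece" else -1.0
        states = [(move, status) for move in range(1, game_length + 1)]
        for i, state in enumerate(states):
            is_last = i == len(states) - 1
            reward = final_reward if is_last else 0.0
            next_value = 0.0 if is_last else values.get(states[i + 1], 0.0)
            current = values.get(state, 0.0)
            # TD(0) update: nudge the estimate toward reward + value of the next state.
            values[state] = current + alpha * (reward + next_value - current)
    return values


if __name__ == "__main__":
    learned = train_value_function()
    print("value right after losing a piece:", round(learned[(1, "lost_piece")], 3))
    print("value right after keeping it:   ", round(learned[(1, "kept_piece")], 3))
```

After training, the estimate for the state right after losing the piece is already strongly negative, even though the actual reward only shows up on the last move. That early signal is what "short-circuiting the wait" means here, and it is the role the article attributes to emotions.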

Takeaway 6

The Next Big Thing in Tech Might Not Have a Screen.

A secret project between OpenAI and legendary designer Jony Ive aims to create a new physical “AI device.” This is not an attempt to build another smartphone. The vision is for the “first device truly conceived for general artificial intelligence,” and it would not be centered on a screen.

Instead, the device would prioritize voice, sensors, and environmental context to provide proactive assistance. By deeply understanding a user’s habits, surroundings, and intentions, it could anticipate needs and act as a seamless bridge between the user and AI. The ambition here is not just a new gadget; it’s a strategic power play. Sam Altman has publicly stated a desire to move beyond the limitations of dominant platforms like iOS and Android. This device represents an attempt to control the primary user entry point for AI, creating an entirely new hardware category and market standard for how humans interact with intelligence.

A New Frontier

The AI industry is undergoing a necessary and profound maturation. The era of easy gains from brute-force engineering is over, forcing the field to move beyond scaling and into a high-stakes quest for the fundamental principles of intelligence itself. Researchers are now grappling with the core challenges of generalization, defining new paradigms for continually learning agents, and re-imagining the very hardware through which we will engage with these powerful new systems.

As we stand on the edge of this new frontier, we are left with a critical question about the future we are building.

Your take: Is the bigger risk AI’s current fragility, or the rapid economic acceleration ahead?